Output

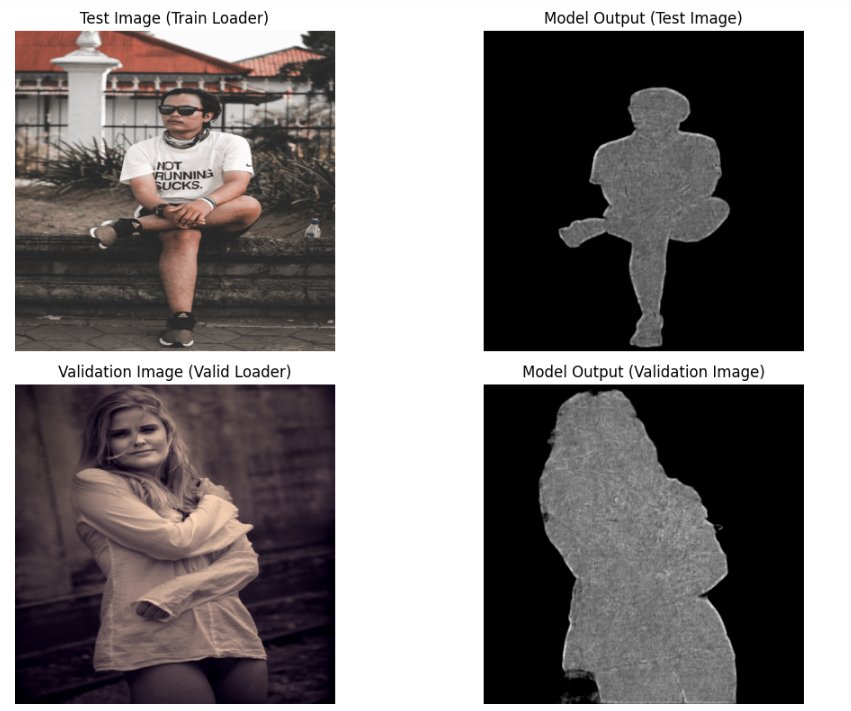

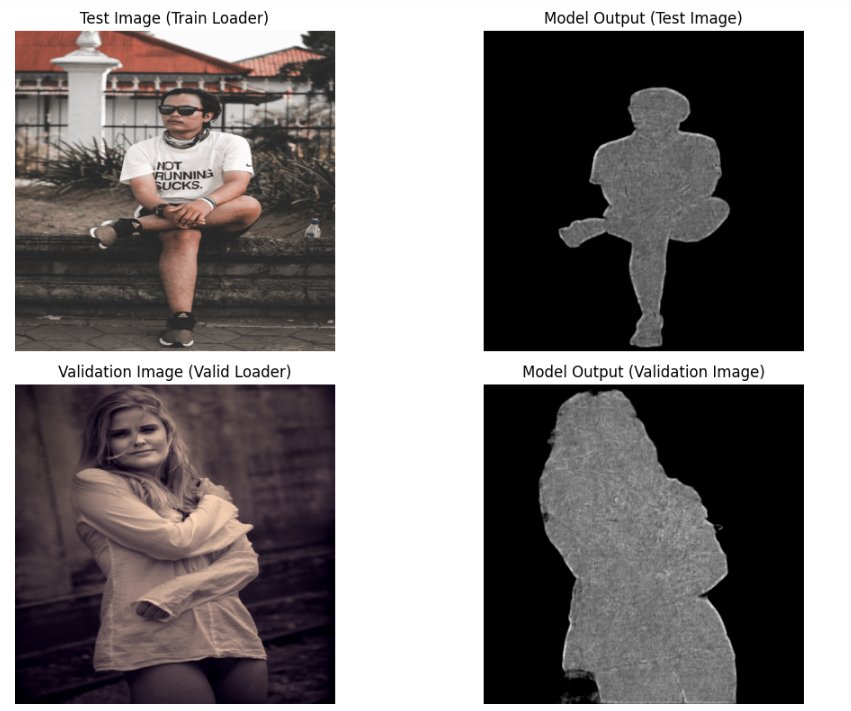

Training vs validation predictions.

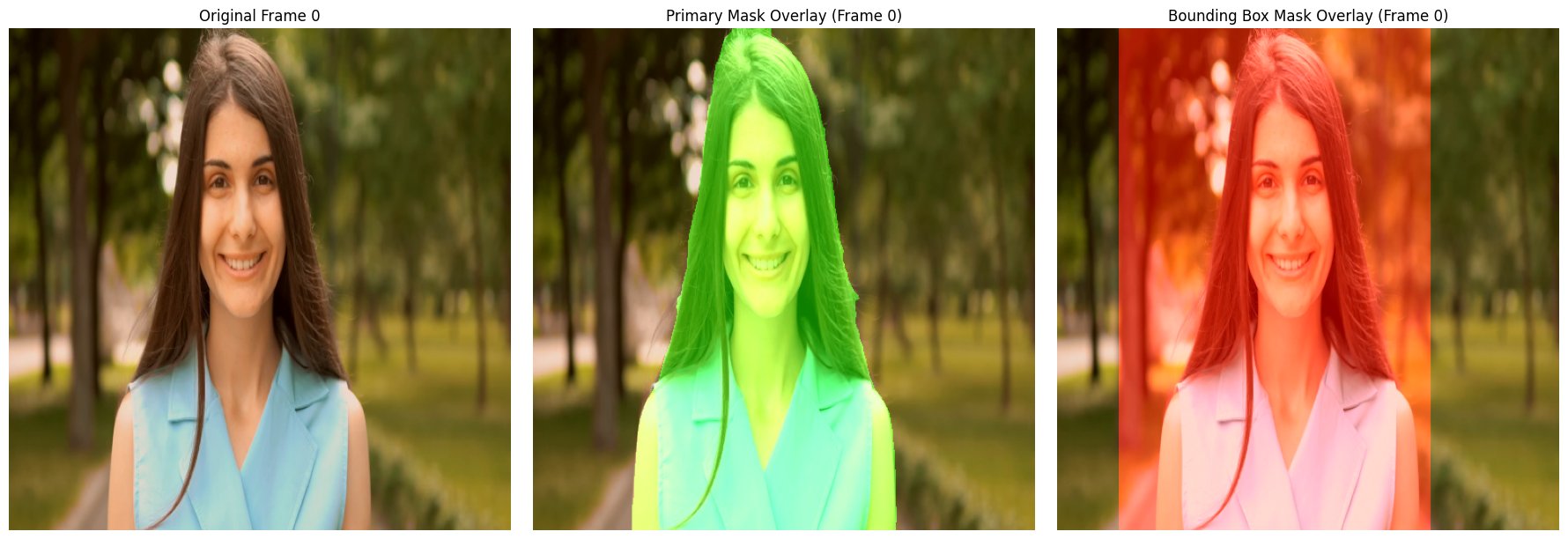

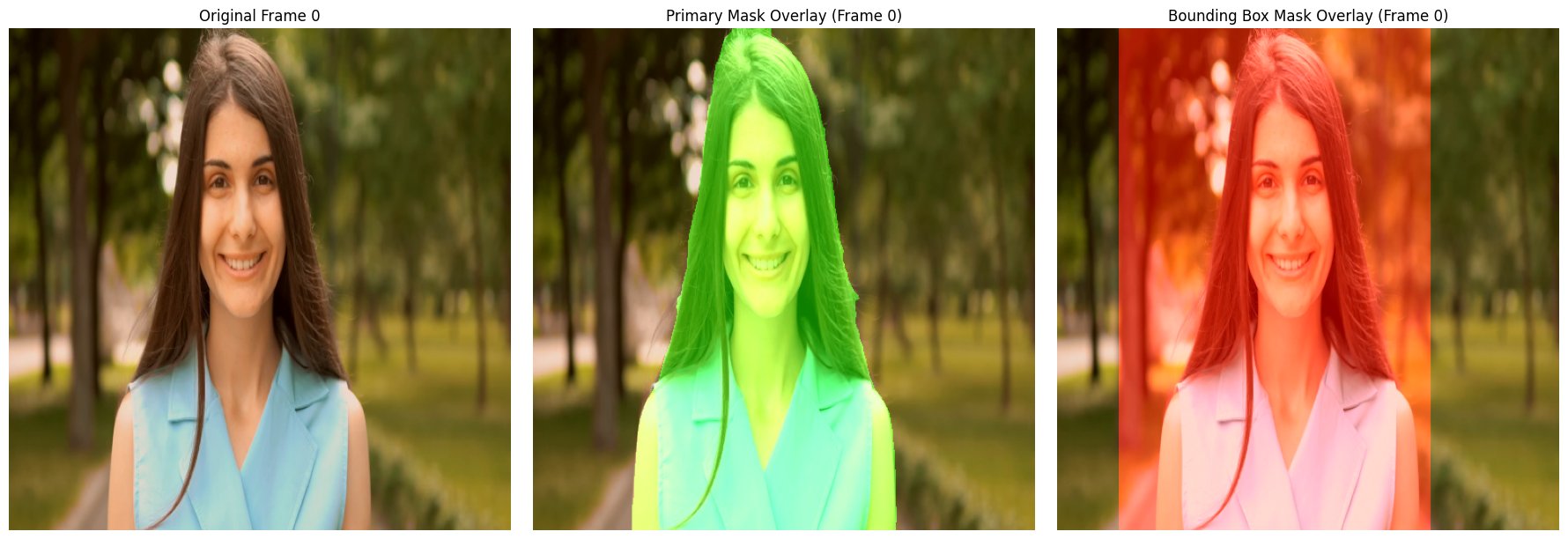

Original frame, SegNet mask (green), and YOLO bounding box (red).

A two-model pipeline that segments humans from video and outputs production-ready EXR files for compositing in Nuke.

Training vs validation predictions.

Original frame, SegNet mask (green), and YOLO bounding box (red).

The pipeline takes a video and automatically separates humans from the background frame by frame. Two models work together. YOLOv8 is an object detection model that locates humans in each frame and draws a bounding box around them. A custom SegNet then works inside that box to produce a precise per-pixel mask of the person.

SegNet is an encoder-decoder architecture designed for pixel-level segmentation. The encoder compresses the image into a compact feature representation. The decoder maps those features back to full resolution, classifying each pixel as foreground or background. Both the segmentation mask and the bounding box are written into separate channels of a multichannel EXR file, ready for compositing.

U-Net was tested first but produced edges too rough for production use. SegNet improved mask quality but would sometimes predict in empty areas of the frame. Adding YOLOv8 to constrain where segmentation runs solved this. The bounding box also doubles as a garbage mask for compositing.

Both models were trained from scratch on the Supervisely Person Segmentation dataset. The output format is multichannel EXR because that's what compositing software expects. Each channel can be pulled independently in Nuke.